CUDA Library

What is CUDA?

CUDA stands for Compute Unified Device Architecture. It is a parallel computing platform and application programming interface (API) model that allows software to use certain types of graphics processing units (GPUs) for general-purpose processing, an approach called general-purpose computing on GPUs (GPGPU). CUDA makes using a GPU for general-purpose computing simple and elegant. It is a software layer that gives direct access to the GPU’s virtual instruction set and parallel computational elements for the execution of compute kernels.

Origin of CUDA:

In 2003, Ian Buck and a team of researchers unveiled Brook, the first widely adopted programming model to extend C with data-parallel constructs. Buck later joined NVIDIA and led the launch of CUDA in 2006, the first commercial solution for general-purpose computing on GPUs.

CUDA was developed by Nvidia for general-purpose computing on its own GPUs (graphics processing units). CUDA enables developers to speed up compute-intensive applications by running the parallelizable part of the computation on the GPU.

CUDA Background:

Graphics cards are arguably as old as the PC.

1981: IBM releases the Monochrome Display Adapter, which can be considered an early graphics card.

1988: ATI (a company eventually acquired by AMD) offers the 16-bit 2D VGA Wonder card.

1996: A 3D graphics accelerator from 3dfx Interactive becomes available for purchase, making it possible to run the first-person shooter video game Quake at full speed.

1996: Nvidia starts competing in the 3D accelerator market, initially with weak products.

1999: Nvidia introduces the GeForce 256, the first graphics card to be called a GPU. At the time, it was aimed mainly at gaming.

CUDA is designed to work with programming languages such as C, C++, and Fortran. This makes GPU resources much easier to use than with earlier APIs such as Direct3D and OpenGL, which required advanced graphics programming skills. CUDA-powered GPUs also support programming frameworks such as OpenMP, OpenACC, and OpenCL, as well as HIP, by compiling such code to CUDA.

CUDA programming:

CUDA uses a heterogeneous programming model, meaning that both the CPU and the GPU are used. In CUDA, the host refers to the CPU and its memory, while the device refers to the GPU and its memory. Code that runs on the host can manage memory on both the host and the device, and it also launches kernels, which are functions executed on the device. These kernels are executed by many GPU threads in parallel.
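To make the host/device split concrete, here is a minimal sketch of a kernel and its launch from host code. The names `hello_kernel` and `launch_hello` are made up for this illustration, and the launch sizes are arbitrary. It assumes a CUDA-capable GPU and compilation with something like `nvcc hello.cu -o hello`.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: a function marked __global__ runs on the device.
// Every launched GPU thread executes this body once.
__global__ void hello_kernel()
{
    // Global index of this thread in a one-dimensional launch.
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    printf("Hello from GPU thread %d (block %d, thread %d)\n",
           idx, blockIdx.x, threadIdx.x);
}

// Host function (hypothetical helper): launches the kernel and waits for it.
// Returns true if the launch and the execution both succeeded.
bool launch_hello(int blocks, int threads_per_block)
{
    hello_kernel<<<blocks, threads_per_block>>>();

    // Launch-configuration errors are reported by cudaGetLastError().
    if (cudaGetLastError() != cudaSuccess) return false;

    // Kernel launches are asynchronous, so wait until the device is done.
    return cudaDeviceSynchronize() == cudaSuccess;
}

int main()
{
    // 2 blocks of 4 threads each: 8 GPU threads run the kernel in parallel.
    if (!launch_hello(2, 4)) {
        fprintf(stderr, "Kernel launch failed\n");
        return 1;
    }
    return 0;
}
```

The order in which the threads print is not guaranteed, because they run in parallel.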

Given the heterogeneous nature of the CUDA programming model, a typical sequence of operations for a CUDA C program is as follows:

- Declare and allocate both host and device memory.

- Initialize host data.

- Transfer data from the host to the device.

- Execute one or more kernels.

- Transfer results from the device to the host.
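The steps above can be sketched as a small vector-addition program. This is only an illustration under assumptions: the names `vector_add`, `run_vector_add`, and `CUDA_CHECK` are invented for this example, the block size of 256 threads is an arbitrary choice, and error handling simply aborts.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Illustrative helper macro: abort with a message if a CUDA call fails.
#define CUDA_CHECK(call)                                                   \
    do {                                                                   \
        cudaError_t err_ = (call);                                         \
        if (err_ != cudaSuccess) {                                         \
            fprintf(stderr, "CUDA error: %s\n", cudaGetErrorString(err_)); \
            exit(EXIT_FAILURE);                                            \
        }                                                                  \
    } while (0)

// Kernel: each thread adds one pair of elements.
__global__ void vector_add(const float *a, const float *b, float *c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Runs the whole sequence for n elements; returns true if every result is correct.
bool run_vector_add(int n)
{
    const size_t bytes = static_cast<size_t>(n) * sizeof(float);

    // Step 1: declare and allocate host and device memory.
    float *h_a = static_cast<float *>(malloc(bytes));
    float *h_b = static_cast<float *>(malloc(bytes));
    float *h_c = static_cast<float *>(malloc(bytes));
    if (!h_a || !h_b || !h_c) return false;
    float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;
    CUDA_CHECK(cudaMalloc(&d_a, bytes));
    CUDA_CHECK(cudaMalloc(&d_b, bytes));
    CUDA_CHECK(cudaMalloc(&d_c, bytes));

    // Step 2: initialize host data.
    for (int i = 0; i < n; ++i) { h_a[i] = 1.0f; h_b[i] = 2.0f; }

    // Step 3: transfer data from the host to the device.
    CUDA_CHECK(cudaMemcpy(d_a, h_a, bytes, cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(d_b, h_b, bytes, cudaMemcpyHostToDevice));

    // Step 4: execute the kernel with enough blocks to cover n elements.
    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    vector_add<<<blocks, threads>>>(d_a, d_b, d_c, n);
    CUDA_CHECK(cudaGetLastError());

    // Step 5: transfer results from the device to the host.
    // This blocking copy also waits for the kernel to finish.
    CUDA_CHECK(cudaMemcpy(h_c, d_c, bytes, cudaMemcpyDeviceToHost));

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (h_c[i] == 3.0f);

    cudaFree(d_a); cudaFree(d_b); cudaFree(d_c);
    free(h_a); free(h_b); free(h_c);
    return ok;
}

int main()
{
    bool ok = run_vector_add(1 << 20);
    printf("vector_add %s\n", ok ? "succeeded" : "failed");
    return ok ? 0 : 1;
}
```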

CUDA Toolkit:

The NVIDIA CUDA Toolkit provides a development environment for creating high-performance GPU-accelerated applications. With the CUDA Toolkit, you can develop, optimize, and deploy applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centers, cloud-based platforms, and HPC supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library to build and deploy your application on major architectures including x86, Arm, and POWER. The CUDA Toolkit is an additional set of software on top of CUDA that simplifies GPU programming; one example is Nsight, a debugger that integrates with Visual Studio. Scientists and researchers can build applications that scale from single-GPU workstations to cloud installations with thousands of GPUs, using the built-in capabilities for distributing computations across multi-GPU configurations.

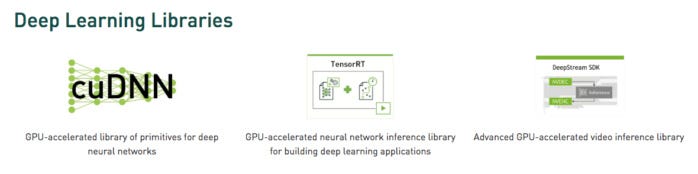

CUDA Libraries:

Deep learning libraries: In the deep learning field, there are three major GPU-accelerated libraries: cuDNN, the GPU component used by most open source deep learning frameworks; TensorRT, Nvidia’s high-performance deep learning inference optimizer and runtime; and DeepStream, a video inference library. TensorRT helps you optimize neural network models, calibrate for lower precision while keeping high accuracy, and deploy the trained models to clouds, data centers, embedded systems, or automotive product platforms.

Linear algebra and math libraries: Linear algebra underpins tensor computations and therefore deep learning models. BLAS (Basic Linear Algebra Subprograms), a collection of matrix algorithms implemented in Fortran in 1989, has been used ever since by scientists and engineers. cuBLAS is a GPU-accelerated version of BLAS and a very effective way to do matrix arithmetic with GPUs. cuSPARSE handles sparse matrices (matrices in which most elements are zero).
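As a small illustration of calling cuBLAS, the sketch below uses the BLAS level-1 routine SAXPY (`y = alpha * x + y`) through `cublasSaxpy`. The wrapper name `saxpy_on_gpu` and the sample values are assumptions made for this example, and the error handling is deliberately minimal. It would typically be built with `nvcc saxpy.cu -lcublas -o saxpy`.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>
#include <cublas_v2.h>

// Hypothetical wrapper: computes y = alpha * x + y on the GPU with cuBLAS.
// Returns false if the sizes differ or any CUDA/cuBLAS call fails.
bool saxpy_on_gpu(float alpha, const std::vector<float> &x, std::vector<float> &y)
{
    if (x.size() != y.size()) return false;
    const int n = static_cast<int>(x.size());
    const size_t bytes = x.size() * sizeof(float);

    float *d_x = nullptr, *d_y = nullptr;
    cublasHandle_t handle = nullptr;

    // The && chain stops at the first failing call.
    bool ok =
        cudaMalloc(&d_x, bytes) == cudaSuccess &&
        cudaMalloc(&d_y, bytes) == cudaSuccess &&
        cublasCreate(&handle) == CUBLAS_STATUS_SUCCESS &&
        cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
        cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
        // Stride 1 for both vectors; alpha is read from host memory by default.
        cublasSaxpy(handle, n, &alpha, d_x, 1, d_y, 1) == CUBLAS_STATUS_SUCCESS &&
        cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;

    if (handle) cublasDestroy(handle);
    cudaFree(d_x);
    cudaFree(d_y);
    return ok;
}

int main()
{
    std::vector<float> x{1.0f, 2.0f, 3.0f, 4.0f};
    std::vector<float> y{10.0f, 20.0f, 30.0f, 40.0f};

    if (!saxpy_on_gpu(2.0f, x, y)) {
        fprintf(stderr, "saxpy_on_gpu failed\n");
        return 1;
    }
    // With alpha = 2, each y[i] becomes 2 * x[i] + (old y[i]).
    for (float v : y) printf("%g ", v);
    printf("\n");
    return 0;
}
```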

Signal, video, and image processing libraries: The fast Fourier transform (FFT) is one of the basic algorithms used for signal processing. It turns a signal (such as an audio waveform) into a spectrum of frequencies. cuFFT is a GPU-accelerated FFT. Codecs encode/compress and decode/decompress video for transmission and display according to established standards. The Nvidia Video Codec SDK accelerates this process with GPUs.
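To show how a signal becomes a spectrum, the following sketch runs a one-dimensional complex-to-complex transform with cuFFT and reports the strongest frequency bin. The helper name `dominant_bin`, the signal length of 256 samples, and the 5-cycle test sine wave are all assumptions chosen for illustration. It would typically be built with `nvcc fft.cu -lcufft -o fft`.

```cuda
#include <cmath>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>
#include <cufft.h>

// Hypothetical helper: returns the index of the strongest frequency bin in
// [0, n/2) for a real-valued signal, or -1 if any CUDA/cuFFT call fails.
int dominant_bin(const std::vector<float> &signal)
{
    const int n = static_cast<int>(signal.size());
    if (n < 2) return -1;
    const size_t bytes = signal.size() * sizeof(cufftComplex);

    // Pack the real samples into complex values with zero imaginary part.
    std::vector<cufftComplex> h(signal.size());
    for (int i = 0; i < n; ++i) { h[i].x = signal[i]; h[i].y = 0.0f; }

    cufftComplex *d = nullptr;
    cufftHandle plan = 0;
    bool have_plan = false;

    bool ok = cudaMalloc(&d, bytes) == cudaSuccess &&
              cudaMemcpy(d, h.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        // One 1D complex-to-complex transform of length n.
        have_plan = cufftPlan1d(&plan, n, CUFFT_C2C, 1) == CUFFT_SUCCESS;
        ok = have_plan;
    }
    // In-place forward transform, then a blocking copy back to the host.
    ok = ok && cufftExecC2C(plan, d, d, CUFFT_FORWARD) == CUFFT_SUCCESS &&
         cudaMemcpy(h.data(), d, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;

    if (have_plan) cufftDestroy(plan);
    cudaFree(d);
    if (!ok) return -1;

    // Find the bin with the largest magnitude in the first half of the spectrum.
    int best = 0;
    float best_mag = -1.0f;
    for (int k = 0; k < n / 2; ++k) {
        float mag = hypotf(h[k].x, h[k].y);
        if (mag > best_mag) { best_mag = mag; best = k; }
    }
    return best;
}

int main()
{
    const float kPi = 3.14159265358979f;
    const int n = 256;
    std::vector<float> signal(n);
    // A sine wave that completes exactly 5 cycles over the n samples.
    for (int i = 0; i < n; ++i) signal[i] = sinf(2.0f * kPi * 5.0f * i / n);

    printf("dominant bin: %d\n", dominant_bin(signal));
    return 0;
}
```

For this test signal the energy concentrates in bin 5, matching the 5 cycles in the input.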

Parallel algorithm libraries: The three libraries for parallel algorithms have different purposes. NCCL (Nvidia Collective Communications Library) is for scaling applications across multiple GPUs and nodes. nvGRAPH is for parallel graph computations. Thrust is a CUDA C++ template library based on the C++ Standard Template Library. Thrust provides a rich collection of data-parallel primitives such as scan, sort, and reduce.
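The Thrust primitives mentioned above (sort, reduce, scan) can be tried with a short sketch like the one below. The helper name `sorted_prefix_sums` and the sample values are assumptions for illustration. Thrust ships with the CUDA Toolkit, so plain `nvcc thrust_demo.cu -o thrust_demo` is usually enough.

```cuda
#include <cstdio>
#include <vector>
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/reduce.h>
#include <thrust/scan.h>
#include <thrust/sort.h>

// Hypothetical helper: sorts the values on the GPU, stores their sum in
// `total`, and returns the running (inclusive) prefix sums of the sorted data.
std::vector<int> sorted_prefix_sums(const std::vector<int> &values, int &total)
{
    // Constructing a device_vector from host iterators copies data to the GPU.
    thrust::device_vector<int> d(values.begin(), values.end());

    thrust::sort(d.begin(), d.end());              // parallel sort
    total = thrust::reduce(d.begin(), d.end());    // parallel sum, starting at 0

    thrust::device_vector<int> prefix(d.size());
    thrust::inclusive_scan(d.begin(), d.end(), prefix.begin());  // parallel scan

    // Copy the result back into ordinary host memory.
    std::vector<int> out(prefix.size());
    thrust::copy(prefix.begin(), prefix.end(), out.begin());
    return out;
}

int main()
{
    int total = 0;
    std::vector<int> sums = sorted_prefix_sums({5, 3, 8, 1, 4}, total);

    // Sorted input is 1 3 4 5 8, so the prefix sums are 1 4 8 13 21.
    for (int v : sums) printf("%d ", v);
    printf("\ntotal = %d\n", total);  // total = 21
    return 0;
}
```

Note that no kernel is written by hand here: Thrust generates and launches the GPU code behind an STL-like interface.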

CUDA application domains:

- Computational finance

- Climate, weather, and ocean modeling

- Data science and analytics

- Deep learning and machine learning

- Defense and intelligence

- Manufacturing/AEC (Architecture, Engineering, and Construction): CAD and CAE (including computational structural mechanics, design and visualization, and electronic design automation)

- Media and entertainment (including animation, modeling, and rendering; compositing; finishing and effects; editing; encoding and digital distribution; on-air graphics; and weather graphics)

- Medical imaging

- Oil and gas

- Research: Higher education and supercomputing (including computational chemistry and biology, numerical analytics, physics, and scientific visualization)

- Safety and security

- Tools and management
